LLM Agents as Medical Assistants

Transcription, Speaker Detection, Template Population, Summary Generation, Diagnosis, Planning, Improvement Notes and more.

Our goal is to build an AI agent (I’m using this term rather loosely) that can sit in on a conversation between a doctor and a patient and do something useful with it. I've had the idea and the code for this for quite some time, but it was overly complicated, and to be honest, I could never get it to work really well without fine-tuning. That changed a couple of days ago with the release of Gemini 2.5 Pro, which works really well with audio without much fiddling.

So, what we want to build is about more than transcription. We want a full pipeline that goes from raw audio to structured outputs that can help both clinicians and patients. Here’s the breakdown of what we’re building:

Transcription

First, we transcribe the conversation. Just get everything down, word for word.

Speaker Detection

We also need to figure out who said what, separating the doctor from the patient in the transcript.

Transcript Validation

Once we have the transcript, we want to make sure it's accurate. This step confirms that the AI agent didn’t miss or mishear anything.

Template Population

After that, we take structured clinical templates, like GP encounter templates, and populate them using the transcript. This helps organize the raw conversation into a clinical format.

Assessment and Plan

This is where we go beyond transcription. We want the AI agent to generate an assessment and a plan of what to do next. Something more analytical: not just repeating what was said, but thinking about it.

Patient Inconsistencies

We want to surface any inconsistencies in what was said. Things that don’t quite match or might need a closer look.

Constructive Feedback for the Doctor

Here, we look at how the conversation was handled. Did the doctor miss something? Was there something important the patient said that didn’t get followed up on? This is meant to help improve future interactions.

Patient Summary

Finally, we want to generate a simple summary that can be sent to the patient. Something short and understandable. No jargon. Just what they need to know.

That's it. One AI agent. One conversation. And a set of outputs that cover everything from documentation to analysis to follow-up.

Ok, let’s now go through these steps one by one and see how they can be done. The full code is in the Jupyter notebook at the bottom.

Transcription, Speaker Detection and Validation

In most projects I’ve worked on so far, transcription and speaker detection were done separately. But if we’re using a large language model, we can actually combine both steps in a single prompt. That simplifies things a lot.

Before we get into that, let me show you the dataset we're going to use. It's a dataset of simulated conversations from a Nature paper published a couple of years ago (Faiha Fareez et al.). We’ll be working with that. Below is an example recording (this recording is also used in all examples below).

The dataset contains 272 recordings. One of the good things about this dataset is that it’s not clean: the recordings are a bit messy, and the audio quality isn’t perfect, which is great for testing robustness.

The prompt we are going to use for the transcription is a bit more detailed than usual. That’s because we’re aiming for a very specific output format, and we want to guide the model properly. We’re also trying to handle edge cases. For example, if the speaker can’t be identified, the model should label it as Unknown. If something is inaudible, it should label it as Inaudible. The idea is to make sure the prompt accounts for anything that might go wrong so that the output stays consistent and useful. Full prompt below.

You are a transcription assistant for medical phone conversations.

Your task is to transcribe an audio recording of a phone call between a **doctor** and a **patient**.

Output a **turn-based transcript**, where each speaker turn is clearly labeled as either **Doctor:** or **Patient:**, followed by exactly what they said.

Maintain correct punctuation and spelling. Do **not** summarize, interpret, or rephrase, just transcribe the spoken words.

If any word or sentence is unclear or unintelligible, insert a clear marker `[inaudible]` in place of the part that could not be transcribed.

If speaker identity is unclear, label that turn as:

Unknown: [spoken text]

**Example format:**

Doctor: Hello, this is Dr. Smith. How can I help you today?

Patient: Hi, I’ve been having pain in my lower back for the past few days.

Doctor: Okay, can you describe the pain for me?

Patient: It’s a sharp, stabbing feeling... mostly when I [inaudible] or twist too fast.

Ok, at this point we can simply send the audio file plus the prompt above to Gemini and get the output below (you can find the full output in the Jupyter notebook at the bottom of the post).
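A minimal sketch of that single request, assuming the `google-genai` Python SDK (`pip install google-genai`) with an API key set in the environment. The model id `gemini-2.5-pro` and the helper names `transcribe`, `parse_turns` and `count_inaudible` are illustrative assumptions, not code from the notebook. The parser relies only on the `Doctor:` / `Patient:` / `Unknown:` labels and the `[inaudible]` marker the prompt asks for, so turns that need a human look can be counted.

```python
import re
from dataclasses import dataclass

# Speaker labels requested by the transcription prompt.
TURN_RE = re.compile(r"^(Doctor|Patient|Unknown):\s*(.*)$")


@dataclass
class Turn:
    speaker: str
    text: str


def transcribe(audio_path: str, prompt: str, model: str = "gemini-2.5-pro") -> str:
    """Send the audio plus the transcription prompt to Gemini in one request.

    Assumes google-genai is installed and an API key is in the environment.
    The import is local so the parsing helpers below work without the SDK.
    """
    from google import genai

    client = genai.Client()
    audio_file = client.files.upload(file=audio_path)
    response = client.models.generate_content(model=model, contents=[prompt, audio_file])
    return response.text


def parse_turns(transcript: str) -> list[Turn]:
    """Split a 'Speaker: text' transcript into turns, skipping blank lines."""
    turns: list[Turn] = []
    for raw in transcript.splitlines():
        line = raw.strip()
        match = TURN_RE.match(line)
        if match:
            turns.append(Turn(speaker=match.group(1), text=match.group(2)))
        elif line and turns:
            # An unlabeled, non-empty line continues the previous turn.
            turns[-1].text += " " + line
    return turns


def count_inaudible(turns: list[Turn]) -> int:
    """Count [inaudible] markers across all turns."""
    return sum(turn.text.count("[inaudible]") for turn in turns)
```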

Doctor: what brings you in here today?

Patient: Yeah, I have this uh pain in my chest.

Doctor: Okay, and uh where is the pain exactly?

Patient: It's just right over on the on the left side.

Doctor: Okay. And uh when did this pain start?

Patient: Uh it started just 30 minutes ago.

Doctor: Okay. And did it just come on randomly or were you doing something strenuous?

Patient: Um, I was just shoveling the driveway and it it came on.

Doctor: Okay. And uh has that uh pain been getting worse at all over the last uh half an hour?

Patient: No, it just came on suddenly and it's um uh Sorry, yeah, the pain has been there this whole time and it's gotten worse um ever since it started.

Doctor: Okay. And how would you describe the pain? Is it kind of like an aching pain or is it sharp or tight tightness kind of pain? How would you describe it?

Patient: It feels dull. I feel like there's uh a lot of pressure on my chest.

Doctor: Okay. And how do you rate the pain right now on a scale of 0 to 10, zero being the least amount of pain you've felt in your life, 10 being the worst?

Patient: Uh, a seven.

Doctor: Seven? Okay. Have you had any uh similar episodes before?

Patient: No, I've never had any chest pain before.

Doctor: Okay. And is the pain just staying in the region, the left chest area that you mentioned, or is it traveling to any other part of your body?

Patient: No, I'm kind of just feeling it right here on the left side.

Doctor: Okay. Uh, is there anything that you do uh that makes the pain either get worse or go away or like get better?

...

The second step is to use a different language model, ideally from another provider. We feed it both the transcript we already have and the original audio file, and ask it to check for any problems that could be medically important.

I'll show you the prompt that I’ve used for this kind of validation. This step is often skipped, or people try to validate using other methods. In my experience, even validating with the same LLM helps, and using a different one is even better. Note how the prompt is written: I had to include a bit more detail, because otherwise the models didn’t do what I needed them to do.

You are a transcription validator.

You will be given:

1. An **audio file**, and

2. A **transcript** of that audio.

Your task is to **review the transcript against the audio** and identify any transcription errors.

You have to follow the audio exactly, do **not** summarize, interpret, or rephrase, just follow the spoken words exactly.

If you find a mistake, output the following for each error:

- **Line number** where the mistake occurs (based on the transcript input)

- **The incorrect text, the whole line with the incorrect text**

- **The corrected text** based on what was actually said in the audio, the whole line

If there are no mistakes, return: `No transcription errors found.`

At the end, output whether the mistakes found are medically impactful, or just smaller errors (slight rephrasing, missed random words, stop words and similar).

**Format your output like this:**

Line 7

Incorrect: Patient: I started talking the medicine yesterday.

Correct: Patient: I started taking the medicine yesterday.

...

Medically impactful: YES (lines: 7)

Transcript: {the_transcript_from_the_previous_step}

I've slightly modified the transcript and added line numbers at the beginning of each line. This way, the validator can point out issues by saying, for example, that there's a problem on line 7.
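Numbering the transcript and substituting it into the validator prompt can be sketched as follows. Only the `{the_transcript_from_the_previous_step}` placeholder comes from the prompt above. The `N. ` prefix, the choice to skip blank lines when counting, and the function names are assumptions.

```python
PLACEHOLDER = "{the_transcript_from_the_previous_step}"


def number_lines(transcript: str) -> str:
    """Prefix each non-empty line with its 1-based number, e.g. '7. Patient: ...'."""
    lines = [line.strip() for line in transcript.splitlines() if line.strip()]
    return "\n".join(f"{i}. {line}" for i, line in enumerate(lines, start=1))


def build_validation_prompt(prompt_template: str, transcript: str) -> str:
    """Substitute the numbered transcript into the validator prompt.

    str.replace is used instead of str.format so that any other braces
    in the prompt text are left untouched.
    """
    return prompt_template.replace(PLACEHOLDER, number_lines(transcript))
```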

Now, since our original transcript is basically perfect, I want to test whether this validation step actually works. So I’ve introduced a small error by replacing the word "vomiting," which appears twice in the transcript, with "fitting." That’s the kind of mistake that could realistically happen during transcription.
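Injecting the test error in code also records which lines were changed, which gives a ground truth to compare against the validator's `Line N` entries. A sketch, assuming lines are counted the same way as when the transcript was numbered (non-empty lines, starting at 1); `inject_error` is a hypothetical helper.

```python
def inject_error(transcript: str, original: str, replacement: str) -> tuple[str, list[int]]:
    """Replace every occurrence of `original` and report the affected line numbers.

    Lines are counted as non-empty lines, 1-based (an assumption about how the
    transcript was numbered for the validator), so the result can be compared
    with the validator's 'Line N' entries.
    """
    lines = [line.strip() for line in transcript.splitlines() if line.strip()]
    changed: list[int] = []
    for i, line in enumerate(lines, start=1):
        if original in line:
            lines[i - 1] = line.replace(original, replacement)
            changed.append(i)
    return "\n".join(lines), changed
```

Called as `inject_error(transcript, "vomiting", "fitting")`, it returns the corrupted transcript together with the numbers of the lines that mentioned vomiting.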

Let’s see if the model can catch that.

Line 19

Incorrect: Patient: Um, I think it's a bit, bit worse if I'm moving around or when I was walking in here, I think it it made it a bit worse, but uh, nothing seems to make it any better since it's starting.

Correct: Patient: Um, I think it's a bit, bit worse if I'm moving around or when I was walking in here, I think it it made it a bit worse, but uh, nothing seems to make it any better since it started.

Line 23

Incorrect: Patient: Um, I've felt a little bit uh shorter breath or having difficulty uh breathing since yesterday when the pa- or sorry, since the pain started, but um, just the difficulty breathing.

Correct: Patient: Um, I've felt a little bit uh shorter of breath or having difficulty uh breathing since yesterday when the pa- or sorry, since the pain started, but um, just the difficulty breathing.

Line 32

Incorrect: Doctor: No. Okay. Have you been having any symptoms like nausea or fitting or any fevers or chills?

Correct: Doctor: No? Okay. Have you been having any symptoms like nausea or vomiting or any fevers or chills?

Line 33

Incorrect: Patient: Um, no nausea or fitting, but um, I do feel a little bit hot today.

Correct: Patient: Um, no nausea or vomiting, but um, I do feel a little bit hot today.

Medically impactful: YES (lines: 32, 33)

Ok, so we see that lines 32 and 33 are flagged as medically impactful; these are the ones where I replaced vomiting with fitting. The validator also found a small mistake on line 19 (the last two words were originally “it’s starting”, and the validator corrected them to “it started”) and on line 23 (originally “shorter breath”, corrected to “shorter of breath”). Lines 19 and 23 were not flagged as medically significant.
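To check the validator's findings automatically, its report can be parsed back into structured records. The sketch below assumes the model follows the `Line N` / `Incorrect:` / `Correct:` / `Medically impactful:` layout requested in the prompt; `parse_validation_report` and `Correction` are illustrative names, and a real pipeline would also need to handle the model drifting from that layout.

```python
import re
from dataclasses import dataclass


@dataclass
class Correction:
    line: int
    incorrect: str
    correct: str


def parse_validation_report(report: str) -> tuple[list[Correction], list[int]]:
    """Parse the validator output into corrections and medically impactful lines.

    Assumes the 'Line N' / 'Incorrect:' / 'Correct:' layout from the prompt.
    Returns ([], []) for 'No transcription errors found.'
    """
    corrections: list[Correction] = []
    current_line = None
    incorrect = None
    for raw in report.splitlines():
        line = raw.strip()
        header = re.fullmatch(r"Line (\d+):?", line)
        if header:
            current_line, incorrect = int(header.group(1)), None
        elif line.startswith("Incorrect:"):
            incorrect = line[len("Incorrect:"):].strip()
        elif line.startswith("Correct:") and current_line is not None and incorrect is not None:
            corrections.append(Correction(current_line, incorrect, line[len("Correct:"):].strip()))
            current_line, incorrect = None, None

    # The final verdict line, e.g. 'Medically impactful: YES (lines: 32, 33)'.
    impactful: list[int] = []
    verdict = re.search(r"Medically impactful:\s*YES\s*\(lines:\s*([\d,\s]+)\)", report)
    if verdict:
        impactful = [int(n) for n in re.findall(r"\d+", verdict.group(1))]
    return corrections, impactful
```

If the injected lines are known, comparing them with `impactful` shows whether the validator flagged exactly the corrupted lines (here, 32 and 33).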

Template Population

Okay, now we have the transcript. What would be even better is if, given a template like a GP consultation template, we could take the transcript and populate that template with the required information.

We’re going to do exactly that. We will give the transcript and template to the LLM, and ask it to fill it in.

The key here is to be very clear in the prompt. We need to tell the model not to add anything that isn't in the transcript, not to infer, not to rephrase. Just stick as closely as possible to what’s actually said. That way we stay close to the ground truth and avoid misinterpreting anything.

Because we asked the model not to infer anything, points 7, 8 and 9 of the template will be empty, as they were not discussed in the call. But let’s have our agent fill them in as well: for these points, we will allow it to do a bit of reasoning rather than just transcribing.
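One way the strict rules and the exception for points 7, 8 and 9 might be assembled into a single prompt is sketched below. The rule wording is an illustrative assumption, not the author's prompt (which is in the notebook), and `build_population_prompt` is a hypothetical helper.

```python
from typing import Sequence

# Illustrative rules only; the prompt actually used is in the notebook.
POPULATION_RULES = """You are filling in a clinical template from a doctor-patient consultation transcript.
Use only information that is explicitly stated in the transcript.
Do not add, infer, or rephrase anything; stay as close as possible to what was said.
If a field was not discussed, leave it empty."""


def build_population_prompt(template: str, transcript: str,
                            reasoning_sections: Sequence[str] = ()) -> str:
    """Assemble the template-population prompt.

    `reasoning_sections` lists template points (e.g. "7", "8", "9") where the
    model may reason beyond the transcript; all other fields stay literal.
    """
    parts = [POPULATION_RULES]
    if reasoning_sections:
        names = ", ".join(reasoning_sections)
        parts.append(
            f"Exception: for sections {names}, you may reason beyond the transcript, "
            "and should label those entries as your own reasoning."
        )
    parts.append(f"Template:\n{template.strip()}")
    parts.append(f"Transcript:\n{transcript.strip()}")
    return "\n\n".join(parts)
```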

Okay, the next thing we’re going to do is validate this output, just to be sure. For validation, I’ll provide the original audio and the populated template, and ask our LLM to check whether everything looks correct. It’s important that we use the original audio here, not any intermediate outputs, because that’s our ground truth.
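A sketch of this step, assuming the `google-genai` SDK with the audio sent inline via `types.Part.from_bytes`. The instruction text and the `Section` / `Error` / `Evidence` / `Correction` layout are assumptions modelled on the validator output shown in this post. The helper names are hypothetical, and `audio/mp3` should be changed to match the actual file format. `parse_findings` turns the reply into records that could be handed back to the agent for fixing.

```python
from dataclasses import dataclass
from pathlib import Path

# Illustrative instructions; the layout mirrors the validator output in the post.
VALIDATION_INSTRUCTIONS = """You will be given the audio of a doctor-patient consultation and a clinical template populated from it.
Check every statement in the template against the audio only.
For each unsupported or incorrect statement, output:
Section: <template section>
Error: <what is wrong>
Evidence: <timestamp and what was actually said>
Correction: <how to fix it>
If everything is supported by the audio, output: No problems found."""

FIELDS = ("Section", "Error", "Evidence", "Correction")


@dataclass
class Finding:
    section: str = ""
    error: str = ""
    evidence: str = ""
    correction: str = ""


def build_template_validation_prompt(populated_template: str) -> str:
    """Append the populated template to the validation instructions."""
    return f"{VALIDATION_INSTRUCTIONS}\n\nPopulated template:\n{populated_template.strip()}"


def validate_template(audio_path: str, populated_template: str,
                      model: str = "gemini-2.5-pro", mime_type: str = "audio/mp3") -> str:
    """Check the populated template against the original audio, not intermediate outputs."""
    from google import genai  # assumes: pip install google-genai
    from google.genai import types

    client = genai.Client()  # reads the API key from the environment
    audio_part = types.Part.from_bytes(data=Path(audio_path).read_bytes(), mime_type=mime_type)
    response = client.models.generate_content(
        model=model,
        contents=[build_template_validation_prompt(populated_template), audio_part],
    )
    return response.text


def parse_findings(report: str) -> list[Finding]:
    """Parse 'Section/Error/Evidence/Correction' blocks; other text is ignored."""
    findings: list[Finding] = []
    current = None
    last_field = None
    for raw in report.splitlines():
        line = raw.strip()
        if not line:
            continue
        key, sep, value = line.partition(":")
        if sep and key in FIELDS:
            if key == "Section":
                current = Finding()
                findings.append(current)
            if current is not None:
                last_field = key.lower()
                setattr(current, last_field, value.strip())
        elif current is not None and last_field:
            # A line without a field label continues the previous field.
            setattr(current, last_field, getattr(current, last_field) + " " + line)
    return findings
```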

Have a look at the Jupyter notebook for the full example. Here, I’ll just show the output. The validator model has identified one problem, which we can now go ahead and fix if we want (or again ask our agent to fix it).

Section: History of Presenting Complaint (HPC) - Relevant negatives

Error: The template states "no pain on breathing" as a relevant negative, but the patient did not explicitly deny this symptom.

Evidence: At [02:22], the doctor asks about "...a cough or difficulty breathing or any pain when you're breathing in or out?". The patient responds from [02:30] onwards, confirming difficulty breathing ("felt a little bit uh shorter breath or having difficulty uh breathing... since the pain started... just the difficulty breathing"), but never explicitly confirms or denies having pain on breathing. Stating "no pain on breathing" is an inference not directly supported by the audio.

Correction: The phrase "no pain on breathing" should be removed from the relevant negatives, or noted as 'not mentioned' rather than explicitly denied. A more accurate representation would be to only list symptoms explicitly denied.

Assessment and Plan

For this section and the next three, I’ll keep things a bit shorter. By now, you probably get the general idea of how everything works. I’ll just explain what we’re doing in one or two sentences and then show the results.

If you’re interested in the details, the prompts, full inputs and outputs, check out the Jupyter notebook. Everything is there.

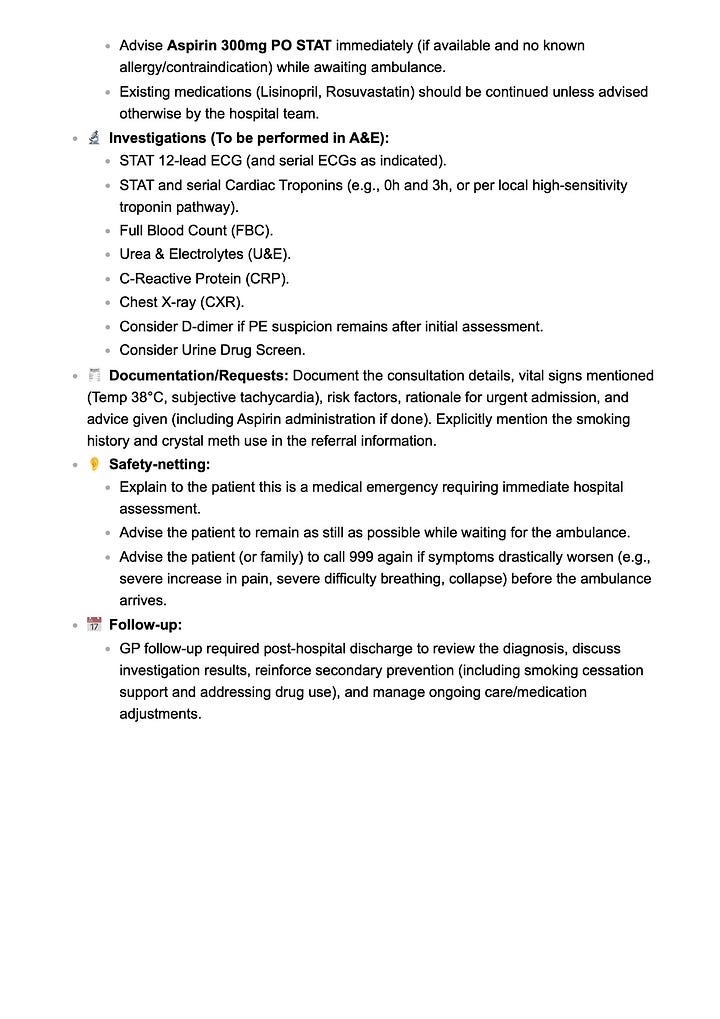

In this section, we want our agent to do a bit more reasoning: not just sticking to the transcript, summarising, or populating templates, but going a step further into assessment, planning, and follow-up, the kind of thing a doctor would usually do.

Let’s try it out and see how it looks.
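Since this section and the next three each come down to one more prompt over the same transcript, they can be run in a loop. In the sketch below, the task instructions are illustrative placeholders rather than the prompts from the notebook, and `llm` stands for any function that takes a prompt string and returns the model's text (for example, a thin wrapper around a Gemini call); all names are hypothetical.

```python
from typing import Callable

# Illustrative instructions only; the actual prompts are in the notebook.
ANALYSIS_TASKS = {
    "assessment_and_plan": "Based on the transcript, write a clinical assessment and a plan of next steps.",
    "doctor_feedback": "Review how the doctor handled the consultation: strengths, weaknesses, "
                       "and anything important the patient said that was not followed up.",
    "patient_summary": "Write a short summary for the patient in plain language, without medical jargon.",
    "patient_inconsistencies": "List places where the patient contradicts themselves "
                               "or where statements do not quite match.",
}


def run_analysis_tasks(transcript: str, llm: Callable[[str], str]) -> dict[str, str]:
    """Run each analysis task as a separate prompt over the same transcript."""
    results: dict[str, str] = {}
    for name, instruction in ANALYSIS_TASKS.items():
        prompt = f"{instruction}\n\nTranscript:\n{transcript.strip()}"
        results[name] = llm(prompt)
    return results
```

Keeping each task as its own prompt, rather than one combined request, makes it easier to tune or validate each output separately.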

Constructive Criticism for the Doctor

Another thing I wanted to try was adding a kind of review of the call: something that could highlight what the doctor might have done better, or point out the strong and weak parts of the conversation. See the result below:

Patient Summary

Another one: how about a patient summary? Something we can easily give to the patient that covers the main points in simple, easy-to-understand language.

Patient Inconsistencies

And lastly, how about patient inconsistencies? Places where the patient might have contradicted themselves, or where something didn’t quite seem right.

Conclusion

So that’s the full pipeline. We start with a raw conversation between a doctor and a patient, and step by step we build something meaningful out of it: transcription, speaker detection, validation, template population, reasoning for assessment and planning, reviewing the interaction, generating a simple patient summary, and even spotting inconsistencies.

The idea was to create an AI agent that isn’t just passively recording things but is actively supporting clinical work and improving the experience for both clinicians and patients. This blog post is meant to show what is possible; there is still a lot of work (e.g. validation, testing, development) needed to make this real-world ready.

To wrap up, I want to note that this is a major simplification of the full pipeline and of what it takes to build LLM agents as medical assistants. There’s a huge amount of work needed to get something like this into production, especially when it comes to compliance with medical guidelines, patient privacy, and regulations. The goal of this post is to showcase things, not to present a production-ready solution.

Jupyter notebook link

Thank you for reading,

Zeljko