A Large Language Model for Healthcare | NHS-LLM and OpenGPT

Introducing OpenGPT, a framework for producing grounded, domain-specific LLMs, and NHS-LLM, a conversational model for healthcare made using OpenGPT.

In 2022, we showcased Foresight, a GPT model for patient timelines trained using real-world hospital data. Today we introduce OpenGPT and NHS-LLM. OpenGPT is a framework that facilitates the generation of grounded instruction-based datasets and the supervised training of LLMs. NHS-LLM is a large language model (LLM) for healthcare made using OpenGPT. This work is part of the Foresight v2 project, which aims to build a full-fledged conversational LLM for healthcare (Figure 1).

Introduction

Creating an LLM for healthcare is not that different from creating an LLM for any other specialised domain. I’ve recently been exploring which steps are necessary to create an LLM for healthcare and ended up with the following four (Figure 1):

Collect a large-scale text dataset (e.g. the Open Internet) and train a GPT-like model on the next-word prediction task (standard language modelling). For this step, I have always used an existing LLM (e.g. LLaMA), as training one from the ground up is very expensive.

Collect two types of instruction-based datasets, and fine-tune the model:

Freely accessible high-quality general instruction-based dataset (e.g. Open Assistant)

Specialised instruction-based datasets grounded in medical texts, health records and guidelines (this is the focus of today’s post; I will show how such a dataset can be generated automatically).

Collect and process hospital data into patient timelines, and fine-tune the model (see Foresight v1).

Align the model to clinical use cases with RLHF performed by healthcare professionals.

The rest of this blog post will focus on the second step (Figure 1, up to the NHS-LLM part), or, to be more precise, on the automatic generation of grounded medical instruction-based datasets and the supervised training of LLMs on such data.

Instruction-based datasets

An instruction-based dataset is a collection of tasks and solutions that we can use to fine-tune a pre-trained Large Language Model. Examples of such instructions include:

Questions and Answers

Q: What is diabetes? A: Diabetes is a disease that occurs when your blood glucose, also called blood sugar, is too high.

Multiple Choice Questions:

Q: Choose the correct statement about laparoscopic liver resection efficacy from the following options: a) there is no difference in the overall patient survival rate or disease-free survival rate between laparoscopic liver resection and open resection, b) laparoscopic liver resection has a higher patient survival rate than open resection, c) laparoscopic liver resection has a lower disease-free survival rate than open resection. A: The correct statement about laparoscopic liver resection efficacy from the given options is option a).

Medical Tasks (e.g. Diagnosis)

T: Based on the given description, provide a diagnosis and recommend an appropriate treatment plan: A 25-year-old male presents with complaints of burning pain in the lower chest primarily after consuming a large meal or spicy food items. The pain resolves with over-the-counter antacids. He denies any shortness of breath or difficulty swallowing, and no weight loss is reported. S: Diagnosis: Gastroesophageal reflux disease (GERD), Treatment plan: …
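To make the idea of "tasks and solutions" concrete, here is a minimal sketch of how such records could be stored. The `InstructionRecord` class, its field names and the JSON Lines layout are my own assumptions for illustration; they are not the format used by OpenGPT.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class InstructionRecord:
    """One task/solution pair (hypothetical schema, for illustration only)."""
    kind: str         # e.g. "qa", "multiple_choice", "medical_task"
    instruction: str  # the question or task given to the model
    solution: str     # the answer we want the model to learn


# The Q/A example from above, expressed as a record.
records = [
    InstructionRecord(
        kind="qa",
        instruction="What is diabetes?",
        solution=(
            "Diabetes is a disease that occurs when your blood glucose, "
            "also called blood sugar, is too high."
        ),
    ),
]


def to_jsonl(items):
    """Serialise records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(asdict(item)) for item in items)


def from_jsonl(text):
    """Parse JSON Lines back into records, skipping empty lines."""
    return [
        InstructionRecord(**json.loads(line))
        for line in text.splitlines()
        if line.strip()
    ]
```

One record per line keeps the dataset easy to stream, shuffle and concatenate with other instruction datasets later on.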

Recently, many have tried to automatically generate an instruction-based dataset using a Teacher (e.g. ChatGPT, GPT-4, GPT-3) to train their own conversational AI. The most famous is probably Alpaca, done by Stanford. The approach they used is as follows:

Prompt GPT-3 to generate a set of diverse tasks (prompt template). In this way generate a large dataset of tasks (52k in their example). A couple of examples of tasks generated by GPT-3:

What are the three primary colours?

Describe a time when you had to make a difficult decision.

How did Julius Caesar die?

For every one of the generated tasks, ask GPT-3 to solve it. In this way, we end up with 52k tasks and solutions. Solutions generated by GPT-3 for the above three tasks:

The three primary colours are red, blue, and yellow.

I had to make a difficult decision when I was working as a project manager at a construction company. I was in charge…

Julius Caesar was assassinated by a group of up to 60 conspirators, led by Gaius Cassius Longinus and Marcus Junius Brutus, in the Senate House on the Ides of March (15 March) of 44 BC.
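The two steps above can be sketched in a few lines. This is only an illustration of the idea, not the Alpaca code: the `Teacher` type is a hypothetical stand-in for a call to a model like GPT-3, the generation prompt is heavily abbreviated, and `stub_teacher` returns canned text so the example runs without any API access.

```python
from typing import Callable, List, Tuple

# Hypothetical interface: a teacher takes a prompt and returns text.
Teacher = Callable[[str], str]


def generate_tasks(teacher: Teacher, n_tasks: int) -> List[str]:
    """Step 1: ask the teacher for a list of tasks, one per line."""
    prompt = (
        f"You are asked to come up with a set of {n_tasks} "
        "diverse task instructions."
    )
    raw = teacher(prompt)
    tasks = [line.strip() for line in raw.splitlines() if line.strip()]
    return tasks[:n_tasks]


def solve_tasks(teacher: Teacher, tasks: List[str]) -> List[Tuple[str, str]]:
    """Step 2: ask the teacher to solve every generated task."""
    return [(task, teacher(task)) for task in tasks]


def stub_teacher(prompt: str) -> str:
    """Canned stand-in for a real model, used only to run the sketch."""
    canned = {
        "What are the three primary colours?":
            "The three primary colours are red, blue, and yellow.",
        "How did Julius Caesar die?":
            "Julius Caesar was assassinated by a group of conspirators.",
    }
    if prompt.startswith("You are asked"):
        return "\n".join(canned)  # the keys double as generated tasks
    return canned.get(prompt, "")


tasks = generate_tasks(stub_teacher, 2)
pairs = solve_tasks(stub_teacher, tasks)
```

Note that nothing in this loop checks the solutions against an external source: both the tasks and the answers come from the teacher alone.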

Applying this approach to healthcare is extremely simple and only requires a small prompt modification (full prompt). Change “You are asked to come up with a set of 20 diverse task instructions…” to “You are asked to come up with a set of 20 diverse task instructions in the field of medicine and healthcare…”. Example output for such a prompt:

Instruction: Generate a list of three common risk factors for developing type 2 diabetes.

Input: <noinput>

Output: Obesity, family history of diabetes, and physical inactivity are common risk factors for type 2 diabetes.

The limitation of such an approach is that the dataset will have the same biases and knowledge limitations as ChatGPT, so if ChatGPT cannot answer a question, or has a bias, the same will be reflected in the new model. The diversity of the dataset is also limited by ChatGPT, so if ChatGPT does not know about a disease or never creates a task/solution pair for a certain disease, our dataset will not have any info either.

While making Foresight v2 I was trying to circumvent this limitation, while still using a model like ChatGPT to train at least the base layer of my own model. The solution is rather straightforward and allows us to create a grounded model that possibly has more knowledge and fewer biases than ChatGPT itself for a certain domain.

To make this possible and easy to do, I’ve made OpenGPT - a framework for creating grounded instruction-based datasets and fine-tuning LLMs. Below we will see how this can be done for the healthcare domain, but a similar approach can be applied to any other domain (finance, law, agriculture, etc.).

OpenGPT - Dataset Creation

We start by collecting a sizeable high-quality dataset in the healthcare domain. In my case, I scraped the Patient Information section of the NHS.UK website which contains definitions of diseases together with the corresponding symptoms and medications. In total, we collected the text from 2354 pages.

Next, we write a prompt where we ask a Teacher model like ChatGPT to generate instructions (tasks) based on a context (the context is the text scraped from the NHS.UK website, e.g. Hypertension). This is the most important part and the main difference from what was done for Alpaca by Stanford. Providing a context and prompting a Teacher model to generate instructions based on that context grounds the tasks (and, more importantly, the solutions) in accurate, non-biased data.

{context} - <e.g all the text from the Acromegaly page>

----------------

You are asked to come up with a set of 5 diverse task instructions in the field of medicine and healthcare.

All the task instructions have to be based on the context provided above, and cannot require external knowledge or information.

These tasks will be used to quiz an AI Assistant on the knowledge of the topic above.

Here are the requirements:

1. Try not to repeat the verb for each instruction to maximize diversity.

2. The language used for the instruction also should be diverse. For example, you should combine questions with imperative instructions.

3. The type of instructions should be diverse. Create instructions that include diverse kinds of tasks like step-by-step reasoning, multiple-choice-questions, open-ended generation, classification, editing, complex medical questions, etc.

4. Every instruction has to be self-contained, all the information necessary to solve the task has to be part of the instruction. For example, the instructions should never say "From the provided context" or "given the context" or "based on the context" or "based on the information".

List of 5 task instruction (every task has the following fields: Task Number:, Instruction:, Solution:):

For example, given the context of hypertension, the above prompt generates the following example:

Task Number: 1

Instruction: What is considered a high blood pressure reading?

Solution: Blood pressure readings are recorded with 2 numbers. The systolic pressure (higher number) is considered high when it is above 140, while the diastolic pressure (lower number) is high when it is above 90. However, readings between 120/80mmHg and 140/90mmHg could mean you're at risk of developing high blood pressure if you do not take steps to keep your blood pressure under control. The ideal blood pressure is usually between 90/60mmHg and 120/80mmHg.

.

.

.

The Instruction and Solution are in this case grounded in the information available on the NHS.UK website. It is up to us now to collect a good domain-specific dataset and write a prompt so that the instruction/solution pairs are diverse and cover a wide range of use cases.
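As a rough sketch of how the prompt above could be filled in and how the Teacher's reply could be turned into structured pairs, consider the following. The template is an abbreviated version of the prompt shown earlier (the numbered requirements are left out), and `build_prompt`, `parse_tasks` and the regular expression are my own illustrative helpers, not the OpenGPT implementation. The parser assumes the Teacher sticks to the `Task Number:` / `Instruction:` / `Solution:` layout.

```python
import re

# Abbreviated version of the grounded prompt; requirements omitted.
PROMPT_TEMPLATE = (
    "{context}\n"
    "----------------\n"
    "You are asked to come up with a set of {n} diverse task instructions "
    "in the field of medicine and healthcare.\n"
    "All the task instructions have to be based on the context provided "
    "above, and cannot require external knowledge or information.\n"
    "List of {n} task instruction (every task has the following fields: "
    "Task Number:, Instruction:, Solution:):"
)

# One task runs from "Task Number:" up to the next task or the end of text.
TASK_PATTERN = re.compile(
    r"Task Number:\s*(\d+)\s*"
    r"Instruction:\s*(.*?)\s*"
    r"Solution:\s*(.*?)\s*(?=Task Number:|\Z)",
    re.DOTALL,
)


def build_prompt(context, n=5):
    """Place the scraped page text in front of the task request."""
    return PROMPT_TEMPLATE.format(context=context, n=n)


def parse_tasks(response):
    """Split a Teacher response into instruction/solution dictionaries."""
    return [
        {"task_number": int(number), "instruction": instruction,
         "solution": solution}
        for number, instruction, solution in TASK_PATTERN.findall(response)
    ]
```

Replies that do not follow the expected layout simply produce fewer (or zero) parsed tasks, which makes it easy to spot pages that need to be re-run.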

Prompt Engineering

The act of changing the prompt to get an LLM (e.g. ChatGPT) to do what we want is called prompt engineering. I’ve been doing this for around 2 months now, and honestly, it is more akin to astrology than engineering.

It is not easy to get the models (ChatGPT, GPT-4) to follow more complex and detailed instructions consistently. Often 80% of the output is fine, but in 20% of cases the model does something wrong. For example, when prompting ChatGPT to create a list of Instructions/Solutions given a context, it will occasionally reference the context even though I explicitly told it not to (Figure 2, first task).
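Since the model will not always obey, one pragmatic option is to check the generated tasks after the fact. The sketch below flags instructions that contain any of the phrases the prompt explicitly forbids. The helper names and the task dictionary layout are assumptions of mine, and a phrase list like this will only catch the literal wordings, not every way of referring to the context.

```python
import re

# The phrases the prompt tells the Teacher never to use.
FORBIDDEN_PHRASES = (
    "from the provided context",
    "given the context",
    "based on the context",
    "based on the information",
)


def references_context(instruction):
    """Return True if the instruction uses one of the forbidden phrases."""
    # Collapse whitespace and lowercase so line breaks and casing
    # do not hide a match.
    normalised = re.sub(r"\s+", " ", instruction).lower()
    return any(phrase in normalised for phrase in FORBIDDEN_PHRASES)


def split_by_validity(tasks):
    """Separate self-contained tasks from ones that point at the context."""
    kept, rejected = [], []
    for task in tasks:
        if references_context(task["instruction"]):
            rejected.append(task)
        else:
            kept.append(task)
    return kept, rejected
```

The rejected tasks can be dropped or sent back to the Teacher for another attempt.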

Some things I’ve found that work well when prompting OpenAI models (for ChatGPT and GPT-4, March 2023):

Examples - instead of trying to explain a difficult instruction in words it was usually better to provide an example.

Bullet lists - providing instructions as a numbered/bullet list usually worked better than providing all the instructions in one big block of text.

Positives over Negatives - saying what to do was usually better than saying what not to do.

Concise - do not repeat instructions or write the same thing in many different ways; write it once and be extremely precise.

Model training

The model we’ve trained is called NHS-LLM. It builds upon LLaMA 13B, made available by Meta. Unfortunately, because of the licence, we cannot make it public, but we hope to train one of the open-source models soon and publish it.

The model was trained using OpenGPT, and all scripts for training are available in the repo. I will skip the implementation details and data preparation, as everything is in the repo and the accompanying Jupyter notebooks.

The datasets we have used are:

NHS UK Conditions via OpenGPT (Available for download in the OpenGPT repo).

NICE Guidelines via OpenGPT (The dataset will be made public with Foresight v2).

MIMIC-III Timelines from Foresight v1 (represented as text, and not SNOMED concepts), via OpenGPT.

Open Assistant as the general dataset.

The training was done on 64 A100 (80GB) GPUs made available by Nvidia via the Cambridge-1 supercomputer. However, the training can also easily be done on just one A100; our main reason for using more was the large number of tests we’ve done.

Results

We are presenting results from the qualitative analysis. More results on hospital data and patients will be available in the near future (as part of the Foresight v2 paper).

The questions we are using were written by clinicians to test the performance of the model. For each question, we took the first output from a single run of NHS-LLM. We did not re-generate or try to get better answers, so some answers might not be perfect or can contain small mistakes.

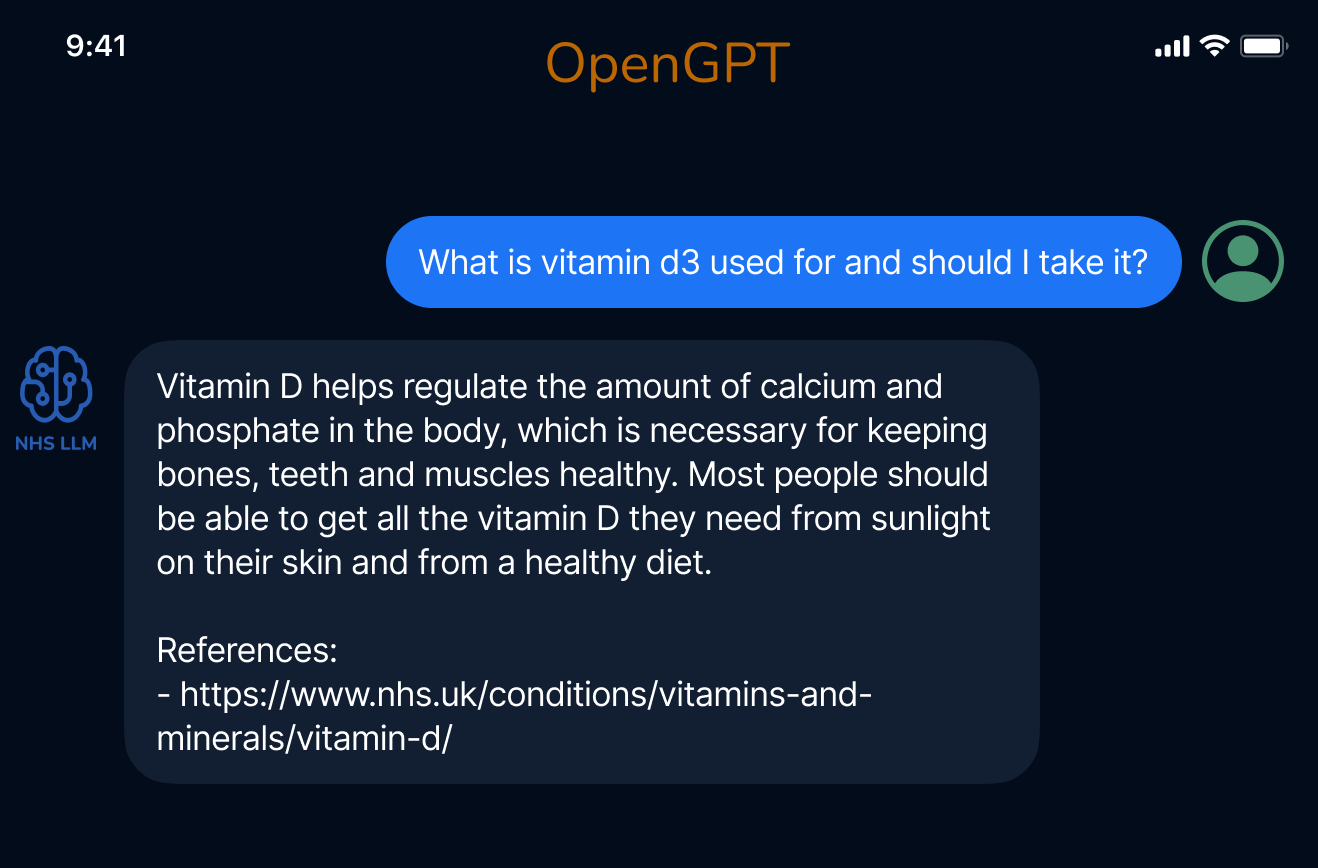

This was obviously a very easy task, but even here we can see that the model is perfectly aligned with what is written on the NHS UK website and even provides a reference. Next, a slightly more complex example:

The answer from the model might be a bit drastic (given the circumstances), but still appropriate, and again a correct reference is provided (we can also be sure that such an example did not exist in the supervised training dataset, so the model is able to generalise).

The following example is one where ChatGPT and GPT-4 fail and do not correctly diagnose pregnancy (more info on using ChatGPT for diagnosis). NHS-LLM solves this perfectly and puts Pregnancy as the most likely diagnosis:

And a couple more examples, this time related to epilepsy.

Conclusion

The current NHS-LLM model is not as verbose as ChatGPT or similar models, but from the questions we’ve tested it on, it shows promising results and even outperforms ChatGPT on various medical tasks. More validation is to come, including validation on hospital data and patient timelines.

There are still some challenges left to be addressed. For example, the questions generated via OpenGPT will always be answerable, self-contained questions, which does not really reflect a real conversation or real questions from users. People will often ask questions that do not have answers, or ask incomplete questions that cannot be answered without more details. More on this and how it can be resolved in a future post.

Finally, this approach is the first step in creating a full-fledged conversational LLM for healthcare. It is still experimental and should be handled with care. More work is needed to show whether this is the right direction and whether it can in fact be used as a stepping stone for creating an LLM for healthcare.

As part of this work, we are making three datasets available (see OpenGPT GitHub):

NHS UK Q/A, 24665 Q/A pairs - A dataset of questions and answers generated via OpenGPT for all conditions found on the NHS UK website.

NHS UK Conversations, 2354 Conversations - A dataset of conversations between an AI-Assistant and a User, generated via OpenGPT and grounded in the data available on the NHS UK website.

Medical Task/Solution, 4688 pairs generated via OpenGPT using the GPT-4 model as a teacher.
